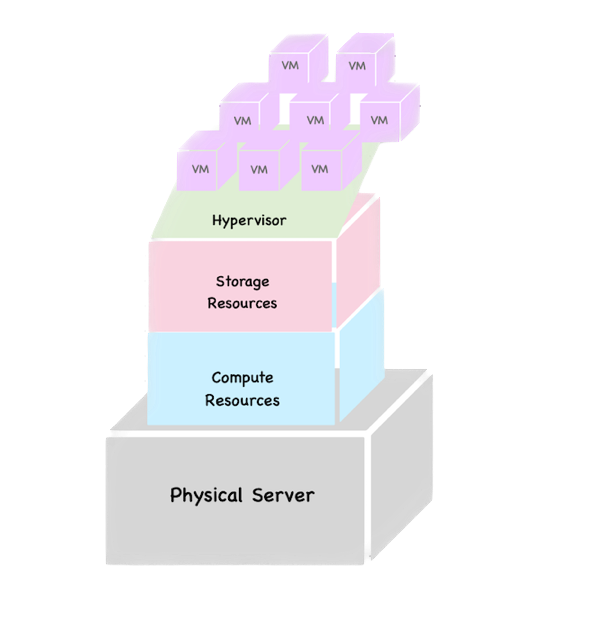

Virtualization

The technology that lies at the core of all cloud operations is virtualization. Virtualization lets you divide the hardware resources of a single physical server into smaller units. That physical server could therefore host multiple virtual machines (VMs) running their own complete operating systems, each with its own memory, storage, and network access.

Cloud Computing

Major cloud providers like AWS have enormous server farms where hundreds of thousands of servers and disk drives are maintained along with the network cabling necessary to connect them. A well-built virtualized environment could provide a virtual server using storage, memory, compute cycles, and network bandwidth collected from the most efficient mix of available sources it can find. A cloud computing platform offers on-demand, self-service access to pooled compute resources where your usage is metered and billed according to the volume you consume. Cloud computing systems allow for precise billing models, sometimes involving fractions of a penny for an hour of consumption.

AWS Global Infrastructure

At the time of writing, the AWS Cloud spans:

- 102 Availability Zones

- within 32 geographic regions around the world

- 400+ Edge Locations and 13 Regional Edge Caches

Availability Zones / Data Centers

An Availability Zone (AZ) is one or more discrete data centers with redundant power, networking, and connectivity in an AWS Region.

All AZs in an AWS Region are interconnected over fully redundant, dedicated metro fiber that provides high-bandwidth, high-throughput, low-latency networking between AZs. All traffic between AZs is encrypted.

If an application is partitioned across AZs, it is better isolated and protected from issues such as

- power outages,

- lightning strikes,

- tornadoes,

- earthquakes,

- and more.

AZs are physically separated by a meaningful distance, many kilometers, from any other AZ, although all are within 100 km (60 miles) of each other.

Regions

A Region is a geographical area. Each Region consists of a minimum of three isolated, physically separate Availability Zones.

Edge Networking

Edge networking in AWS refers to the concept of distributing content, applications, and services closer to end-users or devices. This approach aims to reduce latency and improve performance by minimizing the distance data needs to travel. AWS offers several services and features that support edge networking, such as

- Amazon CloudFront,

- AWS Global Accelerator,

- Amazon Route 53.

These services reside at AWS’ global edge locations connected by dedicated 100 Gbps redundant fiber.

Amazon CloudFront

This is a content delivery network (CDN) service that caches and delivers content, including

- web pages,

- images,

- videos,

- other assets

from multiple edge locations around the world. It helps ensure that users can access content with minimal delay, regardless of their geographical location.

AWS Global Accelerator

This service allows you to direct traffic over the AWS global network infrastructure, dynamically routing requests to the nearest and most optimal AWS endpoint. It improves the availability and performance of applications by leveraging Anycast routing.

In Anycast routing, multiple servers or network nodes are configured with the same IP address. When a user sends a request to that IP address, the network routes the request to the nearest available node using routing protocols and metrics such as network topology, latency, and other factors.
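The selection behavior described above can be sketched in a few lines of Python. This is only an illustration of the outcome: real Anycast decisions are made by routing protocols inside the network, not by application code, and the `Node` class, node names, and latency figures here are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str          # hypothetical node label
    latency_ms: float  # assumed measured latency from the client
    healthy: bool = True


def pick_anycast_node(nodes):
    """Return the healthy node with the lowest latency, or None if there is none."""
    candidates = [n for n in nodes if n.healthy]
    return min(candidates, key=lambda n: n.latency_ms, default=None)


# All three nodes are assumed to advertise the same IP address.
nodes = [
    Node("us-east", 82.0),
    Node("eu-west", 18.5),
    Node("ap-south", 9.0, healthy=False),  # closest, but unavailable
]
chosen = pick_anycast_node(nodes)
print(chosen.name)
```

The unhealthy node is skipped even though it has the lowest latency, which mirrors the "nearest available node" wording above.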

Amazon Route 53

AWS’s domain name system (DNS) service, Route 53, can be used to route traffic based on geographic location, latency, health, or other routing policies. This helps optimize the distribution of traffic and ensures efficient access to resources.

In fact, it focuses on four distinct areas:

- Domain registration

- DNS management

- Availability monitoring (health checks)

- Traffic management (routing policies)

Route 53 now also provides an Application Recovery Controller through which you can configure recovery groups, readiness checks, and routing control.

Security and Identity

Identity and Access Management (IAM)

You use IAM to administer user and programmatic access and authentication to your AWS account. Through the use of users, groups, roles, and policies, you can control exactly who and what can access and/or work with any of your AWS resources.
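As an illustration of what a policy looks like, the sketch below builds a small identity-based policy document as a Python dictionary and serializes it to JSON. The bucket name `example-bucket` is a placeholder, and the single read-only statement is just one assumed example of a least-privilege grant.

```python
import json

# A minimal policy document allowing read access to objects in one bucket.
# "example-bucket" is a placeholder, not a real resource.
policy = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Action": ["s3:GetObject"],
            "Resource": "arn:aws:s3:::example-bucket/*",
        }
    ],
}

policy_json = json.dumps(policy, indent=2)
print(policy_json)
```

A document like this would typically be attached to a user, group, or role rather than used on its own.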

Key Management Service (KMS)

KMS is a managed service that allows you to administer the creation and use of encryption keys to secure data used by and for any of your AWS resources.

Directory Service

For AWS environments that need to manage identities and relationships, Directory Service can integrate AWS resources with identity providers like Amazon Cognito and Microsoft AD domains.

Compute

EC2

In a conventional datacenter or server room, the servers are the main focus, but they are of little use without racks, power supplies, cabling, switches, firewalls, and cooling. Amazon Elastic Compute Cloud (EC2) is designed to replicate the datacenter or server room experience as closely as possible. At its core is the EC2 virtual server, known as an “instance.” As in a local server room, AWS surrounds the instance with services that support and enhance your workloads, including tools for monitoring and managing resources and purpose-built platforms for container orchestration.

Lambda

At its core, AWS Lambda allows developers to encapsulate discrete pieces of functionality into self-contained units known as functions. These functions can be triggered by a multitude of events, ranging from HTTP requests and changes to data stored in Amazon S3, to updates in databases and modifications in the AWS Management Console. Each function executes in a completely isolated environment, with its own compute resources and dependencies, ensuring optimal performance and scalability.
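A function of this kind might look like the following minimal sketch of a Python handler reacting to an S3 event notification. The handler name `lambda_handler`, the returned summary dictionary, and the sample bucket and key are illustrative choices, and the sample event is trimmed to the few fields the code reads.

```python
def lambda_handler(event, context):
    """Collect the bucket/key pairs named in an S3 event notification."""
    uploaded = []
    for record in event.get("Records", []):
        s3_info = record["s3"]
        uploaded.append((s3_info["bucket"]["name"], s3_info["object"]["key"]))
    # The shape of this return value is an assumption made for the example.
    return {"processed": len(uploaded), "objects": uploaded}


# Local invocation with a hand-written, heavily trimmed sample event.
sample_event = {
    "Records": [
        {"s3": {"bucket": {"name": "example-bucket"}, "object": {"key": "logs/app.log"}}}
    ]
}
result = lambda_handler(sample_event, None)
print(result)
```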

Elastic Beanstalk

Beanstalk is a managed service that abstracts the provisioning of AWS compute and networking infrastructure. You are required to do nothing more than push your application code, and Beanstalk automatically launches and manages all the necessary services in the background.

Auto Scaling

Copies of running EC2 instances can be defined as image templates and automatically launched (or scaled up) when client demand can’t be met by existing instances. As demand drops, unused instances can be terminated (or scaled down).
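The scaling decision can be pictured with a simplified rule: keep average CPU near a target by adjusting the instance count proportionally, within fixed bounds. This is a sketch under assumed parameters (the 50% target and the 1 to 10 instance limits are invented), not a description of how the service computes capacity.

```python
import math


def desired_capacity(current, avg_cpu, target_cpu=50.0, minimum=1, maximum=10):
    """Scale the instance count in proportion to load, clamped to [minimum, maximum]."""
    wanted = math.ceil(current * avg_cpu / target_cpu)
    return max(minimum, min(maximum, wanted))


print(desired_capacity(current=4, avg_cpu=80.0))  # demand exceeds target: add instances
print(desired_capacity(current=4, avg_cpu=20.0))  # demand dropped: remove instances
```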

Elastic Load Balancing

Incoming network traffic can be directed between multiple web servers to ensure that a single web server isn’t overwhelmed while other servers are underused or that traffic isn’t directed to failed servers.
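One simple way to picture this is round-robin distribution that skips servers failing their health checks. The sketch below assumes hypothetical server names and a pre-computed health map. Real load balancers support other algorithms and run their own health checks.

```python
from itertools import cycle


def route_requests(servers, healthy, request_count):
    """Assign requests round-robin, skipping servers marked unhealthy."""
    pool = [s for s in servers if healthy.get(s, False)]
    if not pool:
        raise RuntimeError("no healthy servers available")
    rotation = cycle(pool)
    return [next(rotation) for _ in range(request_count)]


assignments = route_requests(
    ["web-1", "web-2", "web-3"],
    {"web-1": True, "web-2": False, "web-3": True},  # web-2 failed its health check
    request_count=4,
)
print(assignments)
```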

Elastic Container Service

Compute workloads using container technologies like Docker and Kubernetes can be provisioned, automated, deployed, and administered using full integration with your other AWS account resources. Kubernetes workloads have their own environment: Amazon Elastic Kubernetes Service (EKS).

Storage

S3

S3 offers highly versatile, reliable, and inexpensive object storage that’s great for data storage and backups. It’s also commonly used as part of larger AWS production processes, including through the storage of script, template, and log files.

S3 Glacier

A good choice for when you need large data archives stored cheaply over the long term and can live with retrieval delays measuring in the hours. Glacier’s life cycle management is closely integrated with S3.

EBS

EBS provides the persistent virtual storage drives that host the operating systems and working data of an EC2 instance. They’re meant to mimic the function of the storage drives and partitions attached to physical servers.

EBS-Provisioned IOPS SSD

If your applications will require intense rates of I/O operations, then you should consider provisioned IOPS. There are currently three flavors of provisioned IOPS volumes: io1, io2, and io2 Block Express. io1 can deliver up to 50 IOPS/GB to a limit of 64,000 IOPS (when attached to an AWS Nitro–compliant EC2 instance) with a maximum throughput of 1,000 MB/s per volume. io2 can provide up to 500 IOPS/GB. And io2 Block Express can give you 4,000 MB/s throughput and 256,000 IOPS/volume.
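The io1 figures above imply a simple ceiling: the provisionable IOPS grows with volume size until it reaches the per-volume cap. A small sketch using only the numbers quoted in this section (the function name is an illustrative choice):

```python
IO1_IOPS_PER_GB = 50    # ratio quoted above
IO1_MAX_IOPS = 64_000   # per-volume limit quoted above


def io1_max_iops(size_gb: int) -> int:
    """Upper bound on provisioned IOPS for an io1 volume of the given size."""
    return min(size_gb * IO1_IOPS_PER_GB, IO1_MAX_IOPS)


for size in (100, 1_280, 2_000):
    print(size, io1_max_iops(size))
```

Under these numbers, a volume needs to be at least 1,280 GB before the 64,000 IOPS cap becomes the limiting factor.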

EBS General-Purpose SSD

For most regular server workloads that, ideally, deliver low-latency performance, general-purpose SSDs will work well. You’ll get a maximum of 3,000 IOPS/volume. For reference, assuming 3,000 IOPS per volume and a single daily snapshot, a general-purpose SSD used as a typical 8 GB boot drive for a Linux instance would, at current rates, cost you $3.29/month.

HDD Volumes

For large data stores where quick access isn’t important, you can find cost savings using older spinning hard drive technologies. The sc1 volume type provides the lowest price of any EBS storage ($0.015/GB-month). Throughput optimized hard drive volumes (st1) are available for larger stores where infrequent bursts of access at a rate of 250 MB/s per TB are sufficient. st1 volumes cost $0.045/GB per month. The EBS Create Volume dialog box currently offers a Magnetic (Standard) volume type.
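To compare the two HDD types, the per-GB rates quoted above can be turned into a monthly storage estimate. This sketch covers storage charges only and assumes the rates in this section still apply. The 2,000 GB volume size is an arbitrary example.

```python
from decimal import Decimal

# Rates as quoted in this section (USD per GB-month); actual prices may differ.
RATES = {"sc1": Decimal("0.015"), "st1": Decimal("0.045")}


def monthly_storage_cost(volume_type: str, size_gb: int) -> Decimal:
    """Estimated monthly storage charge for one volume, ignoring other fees."""
    return RATES[volume_type] * size_gb


for volume_type in RATES:
    print(volume_type, monthly_storage_cost(volume_type, 2_000))
```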

EFS

The Elastic File System (EFS) provides automatically scalable and shareable file storage to be accessed from Linux instances. EFS-based files are designed to be accessed from within a virtual private cloud (VPC) via Network File System (NFS) mounts on EC2 Linux instances or from your on-premises servers through AWS Direct Connect connections. The goal is to make it easy to enable secure, low-latency, and durable file sharing among multiple instances.

FSx

Amazon FSx comes in four flavors: FSx for Lustre, FSx for Windows File Server, FSx for OpenZFS, and FSx for NetApp ONTAP. Lustre is an open source distributed filesystem built to give Linux clusters access to high-performance filesystems for use in compute-intensive operations. As with Lustre, Amazon’s FSx service brings OpenZFS and NetApp filesystem capabilities to your AWS infrastructure. FSx for Windows File Server, as you can tell from the name, offers the kind of file-sharing service EFS provides but for Windows servers rather than Linux. FSx for Windows File Server integrates operations with Server Message Block (SMB), NTFS, and Microsoft Active Directory.

Storage Gateway

Storage Gateway is a hybrid storage system that exposes AWS cloud storage as a local, on-premises appliance. Storage Gateway can be a great tool for migration and data backup and as part of disaster recovery operations.

AWS Snow Family

Migrating large data sets to the cloud over a normal Internet connection can sometimes require far too much time and bandwidth to be practical. If you’re looking to move terabyte- or even petabyte-scaled data for backup or active use within AWS, ordering a Snow device might be the best option. When requested, AWS will ship you a physical, appropriately sized device to which you transfer your data. When you’re ready, you can then send the device back to Amazon. Amazon will then transfer your data to buckets on S3.

AWS DataSync

DataSync specializes in moving on-premises data stores into your AWS account with a minimum of fuss. It works over your regular Internet connection, so it’s not as useful as Snowball for really large data sets. But it is much more flexible, since you’re not limited to S3 (or RDS as you are with AWS Database Migration Service). Using DataSync, you can drop your data into any service within your AWS account. That means you can do the following:

- Quickly and securely move old data out of your expensive datacenter into cheaper S3 or Glacier storage.

- Transfer data sets directly into S3, EFS, or FSx, where it can be processed and analyzed by your EC2 instances.

- Apply the power of any AWS service to any class of data as part of an easy-to-configure automated system.

A single DataSync task can handle external transfer rates of up to 10 Gbps (assuming your connection has that capacity) and offers both encryption and data validation.

Databases

RDS

Amazon Relational Database Service (RDS) is a managed database service that lets you run relational database systems in the cloud. RDS takes care of setting up the database system, performing backups, ensuring high availability, and patching the database software and the underlying operating system. RDS also makes it easy to recover from database failures, restore data, and scale your databases to achieve the level of performance and availability that your application requires.

DynamoDB

DynamoDB is a managed nonrelational database service that can handle thousands of reads and writes per second. It achieves this level of performance by spreading your data across multiple partitions. A partition is a 10 GB allocation of storage for a table, and it’s backed by solid-state drives in multiple availability zones.
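The idea of spreading items across partitions can be sketched as follows. The 10 GB figure comes from the paragraph above. The hash function (SHA-256) and the modulo mapping are stand-ins chosen for illustration, since the service's internal hashing is not described here, and this storage-only estimate ignores any other factor that may influence the partition count.

```python
import hashlib
import math

PARTITION_SIZE_GB = 10  # allocation size quoted above


def partitions_for_storage(table_size_gb: float) -> int:
    """Storage-only estimate of how many partitions a table would need."""
    return max(1, math.ceil(table_size_gb / PARTITION_SIZE_GB))


def partition_for_key(partition_key: str, partition_count: int) -> int:
    """Map a key to a partition index using a stand-in hash function."""
    digest = hashlib.sha256(partition_key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % partition_count


count = partitions_for_storage(25)
print(count, partition_for_key("user#42", count))
```

Because the mapping depends only on the key, the same key always lands on the same partition, while different keys spread across all of them.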

Redshift

Redshift is Amazon’s managed data warehouse service. Although it’s based on PostgreSQL, it’s not part of RDS. Redshift uses columnar storage, meaning that it stores the values for a column close together. This improves storage speed and efficiency and makes it faster to query data from individual columns.
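The difference between row-oriented and columnar layouts can be shown with plain Python data structures. This is a conceptual sketch with made-up order data and says nothing about how Redshift lays out blocks on disk.

```python
# Row-oriented layout: each record keeps all of its fields together.
rows = [
    {"order_id": 1, "region": "eu", "amount": 20.0},
    {"order_id": 2, "region": "us", "amount": 35.0},
    {"order_id": 3, "region": "eu", "amount": 10.0},
]

# Columnar layout: the values of each column are stored together.
columns = {name: [row[name] for row in rows] for name in rows[0]}

# An aggregate over one column only has to touch that column's values.
total_amount = sum(columns["amount"])
print(columns["region"], total_amount)
```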

Networking

VPCs

Like a traditional network, a VPC consists of at least one range of contiguous IP addresses. This address range is represented as a Classless Inter-Domain Routing (CIDR) block. The CIDR block determines which IP addresses may be assigned to instances and other resources within the VPC. You must assign a primary CIDR block when creating a VPC. After creating a VPC, you divide the primary VPC CIDR block into subnets that hold your AWS resources.
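The division of a primary CIDR block into subnets can be worked through with Python's standard `ipaddress` module. The `10.0.0.0/16` block and the `/24` subnet size are example values, not recommendations.

```python
import ipaddress
from itertools import islice

# Example primary CIDR block for the VPC.
vpc_block = ipaddress.ip_network("10.0.0.0/16")

# Carve the block into /24 subnets and take the first three.
subnets = list(islice(vpc_block.subnets(new_prefix=24), 3))

for subnet in subnets:
    print(subnet, subnet.num_addresses)

# Every subnet must fall inside the VPC's primary block.
print(all(subnet.subnet_of(vpc_block) for subnet in subnets))
```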

Direct Connect

The AWS Direct Connect service uses PrivateLink to offer private, low-latency connectivity to your AWS resources. One advantage of Direct Connect is that it lets you bypass the Internet altogether when accessing AWS resources, letting you avoid the unpredictable and high latency of a broadband Internet connection. This approach is useful when you need to transfer large data sets or real-time data, or you need to meet regulatory requirements that preclude transferring data over the Internet.

Application Integration

API Gateway

If you need to build an application that consumes data or other resources existing within the AWS platform, you can make reliable and safe connections using either RESTful or WebSocket APIs. API Gateway lets you publish data coming out of just about any AWS service for use in your IoT device fleets, web or mobile applications, or monitoring dashboards.

Simple Notification Service (SNS)

SNS is a notification tool that can automate the publishing of alert topics to other services (to an SQS Queue or to trigger a Lambda function, for instance), to mobile devices, or to recipients using email or SMS.

Simple Workflow (SWF)

SWF lets you coordinate a series of tasks that must be performed using a range of AWS services or even nondigital (meaning human) events.

Simple Queue Service (SQS)

SQS allows for event-driven messaging within distributed systems that can decouple while coordinating the discrete steps of a larger process. The data contained in your SQS messages will be reliably delivered, adding to the fault-tolerant qualities of an application.
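The decoupling pattern can be illustrated with an in-process queue from the Python standard library. This is only an analogy: SQS itself is a network service reached through the AWS SDK, and the producer, consumer, and message fields below are hypothetical.

```python
import queue

work_queue = queue.Queue()


def producer(order_ids):
    """Enqueue one message per order without knowing who will process it."""
    for order_id in order_ids:
        work_queue.put({"order_id": order_id})


def consumer():
    """Drain the queue at its own pace and return the handled order IDs."""
    handled = []
    while not work_queue.empty():
        message = work_queue.get()
        handled.append(message["order_id"])
        work_queue.task_done()
    return handled


producer([101, 102, 103])
handled = consumer()
print(handled)
```

The producer and consumer never call each other directly, so either side can be changed or scaled without touching the other.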

Application Management

CloudWatch

CloudWatch can be set to monitor process performance and resource utilization and, when preset thresholds are met, either send you a message or trigger an automated response.
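The threshold behavior can be sketched as a small evaluation function: the alarm fires only when the metric exceeds the threshold for a number of consecutive periods. The function name, sample datapoints, and the 80% threshold are assumptions. The three state names match those CloudWatch uses for alarms.

```python
def alarm_state(datapoints, threshold, periods):
    """Evaluate the most recent `periods` datapoints against a threshold."""
    recent = datapoints[-periods:]
    if len(recent) < periods:
        return "INSUFFICIENT_DATA"
    return "ALARM" if all(value > threshold for value in recent) else "OK"


cpu_percent = [40, 85, 90, 95]  # made-up CPU utilization samples
state = alarm_state(cpu_percent, threshold=80, periods=3)
print(state)
```

In practice the "ALARM" result would be the point at which a notification is sent or an automated action is triggered.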

CloudFormation

This service enables you to use template files to define full and complex AWS deployments. The ability to script your use of any AWS resources makes it easier to automate, standardizing and speeding up the application launch process.
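A template file is structured data, so a minimal one can be built and serialized from Python. The sketch below declares a single S3 bucket. The logical ID `ExampleBucket` and the description text are placeholders, and a real deployment would normally define many more resources and properties.

```python
import json

template = {
    "AWSTemplateFormatVersion": "2010-09-09",
    "Description": "Illustrative template with a single S3 bucket",
    "Resources": {
        # "ExampleBucket" is a placeholder logical ID.
        "ExampleBucket": {"Type": "AWS::S3::Bucket"},
    },
}

template_json = json.dumps(template, indent=2)
print(template_json)
```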

CloudTrail

CloudTrail collects records of all your account’s API events. This history is useful for account auditing and troubleshooting purposes.

Config

The Config service is designed to help you with change management and compliance for your AWS account. You first define a desired configuration state, and Config evaluates any future states against that ideal. When a configuration change pushes too far from the ideal baseline, you’ll be notified.
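Comparing a recorded state against a desired baseline can be pictured as a dictionary diff. This is a conceptual sketch with invented setting names. It does not use the Config API or its rule format.

```python
def find_drift(desired, actual):
    """Return settings whose actual value differs from the desired baseline."""
    return {
        key: (expected, actual.get(key))
        for key, expected in desired.items()
        if actual.get(key) != expected
    }


# Hypothetical baseline and recorded state for a storage bucket.
desired_state = {"encryption": True, "public_access": False, "versioning": True}
actual_state = {"encryption": True, "public_access": True}

drift = find_drift(desired_state, actual_state)
print(drift)
```

Each entry pairs the expected value with the observed one, so a non-empty result is the condition that would warrant a notification.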

AWS Well-Architected Framework

1. Operational excellence

- Perform operations as code

- Make frequent, small, reversible changes

- Refine operations procedures frequently

- Anticipate failure

- Learn from all operational failures

2. Security

- Implement a strong identity foundation (least privilege principle)

- Maintain traceability

- Apply security at all layers

- Automate security best practices

Implementation of controls that are defined and managed as code in version-controlled templates

- Protect data in transit and at rest

- Keep people away from data

- Prepare for security events

Run incident response simulations and use tools with automation to increase your speed for detection, investigation, and recovery

3. Reliability

- Automatically recover from failure

- Test recovery procedures

- Scale horizontally to increase aggregate workload availability

Replace one large resource with multiple small resources to reduce the impact of a single failure on the overall workload. Distribute requests across multiple, smaller resources to verify that they don’t share a common point of failure.

- Stop guessing capacity

In on-premises scenarios, resource saturation leading to workload failure is often caused by exceeding capacity, while in the cloud, monitoring and automated adjustments of resources enable efficient demand satisfaction without over- or under-provisioning, within certain limits and managed quotas.

- Manage change in automation

4. Performance efficiency

- Democratize advanced technologies

Make advanced technology implementation smoother for your team by delegating complex tasks to your cloud vendor

- Go global in minutes

- Use serverless architectures

- Experiment more often

- Consider mechanical sympathy

Understand how cloud services are consumed and always use the technology approach that aligns with your workload goals. For example, consider data access patterns when you select database or storage approaches.

5. Cost optimization

- Implement Cloud Financial Management

- Adopt a consumption model

- Measure overall efficiency

- Stop spending money on undifferentiated heavy lifting

- Analyze and attribute expenditure

You can use cost allocation tags to categorize and track your AWS usage and costs. When you apply tags to your AWS resources (such as EC2 instances or S3 buckets), AWS generates a cost and usage report with your usage and your tags.

6. Sustainability

- Understand your impact

- Establish sustainability goals

- Maximize utilization

- Anticipate and adopt new, more efficient hardware and software offerings

- Use managed services

- Reduce the downstream impact of your cloud workload

References