the problem

so i was working on my PXE boot setup with iSCSI storage, and everything seemed perfect. but i had missed a few things that are needed to keep it working after a reboot.

what went wrong

- container services refusing to start

- kubernetes nodes stuck in NotReady state

- having to manually fix things after every single reboot

if you’re dealing with a similar mess where your PXE/iSCSI setup works until you reboot, this guide should help you out. the issue usually boils down to ordering: services try to start before the iSCSI session is up and its disks are mounted.

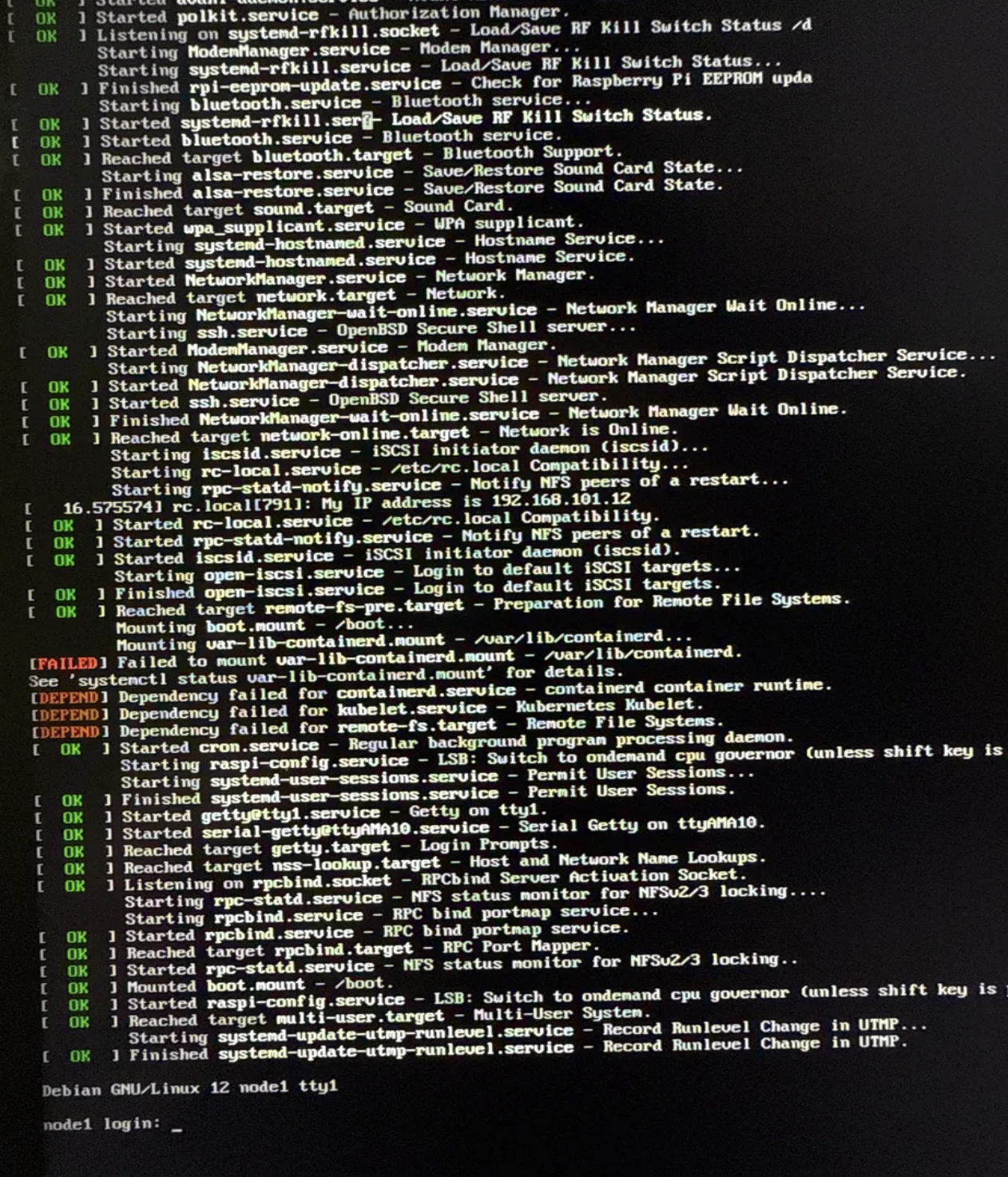

kubernetes node going haywire

here’s what happened during what should have been a simple reboot of my kubernetes node:

boot log:

[FAILED] Failed to mount var-lib-containerd.mount - /var/lib/containerd
[DEPEND] Dependency failed for containerd.service - containerd container runtime
[DEPEND] Dependency failed for kubelet.service - Kubernetes Kubelet

system state:

$ sudo systemctl --failed
  UNIT                     LOAD   ACTIVE SUB    DESCRIPTION
● var-lib-containerd.mount loaded failed failed /var/lib/containerd
● containerd.service       loaded failed failed containerd container runtime
● kubelet.service          loaded failed failed Kubernetes Kubelet

$ df -h | grep -E "(containerd|kubelet|pods)"
# No output - nothing mounted

$ kubectl get nodes
NAME    STATUS     ROLES           AGE   VERSION
node1   NotReady   control-plane   45d   v1.28.2
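rather than grepping df, you can check the expected mount points directly. here's a minimal sketch, assuming a Linux /proc/mounts where the second field of each line is the mount point (paths with spaces are escaped there, which this ignores). `missing_mounts` and the `MOUNTS_FILE` override are hypothetical names, the override only exists so the check can be tried against a sample file.

```shell
# hypothetical helper: print each expected mount point that is NOT currently mounted.
# reads the mount table from MOUNTS_FILE (default /proc/mounts), where field 2 is the
# mount target; returns 1 if anything is missing, 0 if everything is mounted.
missing_mounts() {
  local mounts_file=${MOUNTS_FILE:-/proc/mounts}
  local path status=0
  for path in "$@"; do
    # awk exits 0 only if some line has this exact path as its mount target
    if ! awk -v p="$path" '$2 == p { found = 1 } END { exit !found }' "$mounts_file"; then
      echo "$path"
      status=1
    fi
  done
  return "$status"
}

# usage on the node:
#   missing_mounts /var/lib/containerd /var/lib/kubelet /var/log/pods || echo "mounts missing"
```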

step-by-step fix

here’s how to actually solve this mess:

- setting iSCSI to auto-connect

- fixing the mount configuration

- setting up proper service dependencies (the crucial part!)

step 1: fix iSCSI auto-connection

first, make sure your iSCSI target actually reconnects when you boot:

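# log in to this target automatically at boot instead of waiting for a manual login
sudo iscsiadm -m node -T iqn.2000-01.com.synology:nas.Target-node1 \
  --op update -n node.startup -v automatic

# verify the setting
sudo iscsiadm -m node -T iqn.2000-01.com.synology:nas.Target-node1 \
  --show | grep node.startup
# Should output: node.startup = automatic

# enable the initiator daemon and the boot-time login service
# (on RHEL-family distros the login service is iscsi.service rather than open-iscsi)
sudo systemctl enable iscsid
sudo systemctl enable open-iscsi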
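if a node has more than one target recorded, you can set node.startup for all of them in one go. a sketch, assuming `iscsiadm -m node` prints one `portal,tpgt target-iqn` record per line (its usual format); `list_targets` is a hypothetical helper name.

```shell
# hypothetical helper: read `iscsiadm -m node` output on stdin and print each
# target IQN once. records look like e.g. "192.168.1.10:3260,1 iqn...", so the IQN
# is field 2; a target reachable through several portals is printed only once.
list_targets() {
  awk 'NF >= 2 && !seen[$2]++ { print $2 }'
}

# usage (assumes every target recorded on this node should log in at boot):
#   sudo iscsiadm -m node | list_targets | while read -r target; do
#     sudo iscsiadm -m node -T "$target" --op update -n node.startup -v automatic
#   done
```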

step 2: mount the disks

after establishing the iSCSI connection, the LUNs show up as regular disks (three in my case):

lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINTS
sda    8:0    0  10G  0 disk
sdb    8:16   0  11G  0 disk
sdc    8:32   0   5G  0 disk
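since mkfs is destructive, it's worth confirming which disks actually came from the iSCSI target before formatting anything. a minimal sketch, assuming your lsblk reports `iscsi` in its TRAN (transport) column for these devices; `iscsi_disks` is a hypothetical helper. `ls -l /dev/disk/by-path/ | grep iscsi` is another way to see the same mapping.

```shell
# hypothetical helper: read `lsblk -dn -o NAME,TRAN` output on stdin and print the
# device path of every disk whose transport column says iscsi.
iscsi_disks() {
  awk '$2 == "iscsi" { print "/dev/" $1 }'
}

# usage:
#   lsblk -dn -o NAME,TRAN | iscsi_disks
```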

format them (mkfs wipes whatever is on a device, so double-check the names first):

sudo mkfs.ext4 -L containerd /dev/sda
sudo mkfs.ext4 -L logs /dev/sdb
sudo mkfs.ext4 -L kubelet /dev/sdc

mount each one where it belongs, matching the labels above:

sudo mkdir -p /var/lib/containerd /var/lib/kubelet /var/log/pods
sudo mount /dev/sda /var/lib/containerd
sudo mount /dev/sdc /var/lib/kubelet
sudo mount /dev/sdb /var/log/pods

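now add these lines to /etc/fstab, replacing the UUIDs with your own values (sudo blkid /dev/sda /dev/sdb /dev/sdc prints them).

make sure to set the _netdev option, because this is network storage: it tells systemd to wait for the network before trying to mount these disks.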

UUID=c911f6ed-52d2-4e04-a18a-cb026ed86aeb /var/lib/containerd ext4 defaults,_netdev 0 2
UUID=317346de-f632-4f5d-9ec0-90770f56938d /var/lib/kubelet ext4 defaults,_netdev 0 2
UUID=d35111a5-f56a-4bde-a731-87e630fa0aed /var/log/pods ext4 defaults,_netdev 0 2
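if you'd rather not hand-edit /etc/fstab (or want to script this later), an idempotent append is easy to sketch. `add_fstab_entry` is a hypothetical helper; it assumes whole-disk ext4 filesystems like the ones above and skips a mount point that already has an uncommented entry.

```shell
# hypothetical helper: add_fstab_entry FILE UUID MOUNTPOINT
# appends an ext4 + _netdev line unless FILE already has an uncommented entry
# whose mount point (field 2) is MOUNTPOINT.
add_fstab_entry() {
  local file=$1 uuid=$2 mountpoint=$3
  if awk -v m="$mountpoint" '$1 !~ /^#/ && $2 == m { found = 1 } END { exit !found }' "$file"; then
    echo "skip: $mountpoint already in $file" >&2
    return 0
  fi
  printf 'UUID=%s %s ext4 defaults,_netdev 0 2\n' "$uuid" "$mountpoint" >> "$file"
}

# usage from a root shell (e.g. after `sudo -i`):
#   add_fstab_entry /etc/fstab "$(blkid -s UUID -o value /dev/sda)" /var/lib/containerd
#   mount -a   # mounts any fstab entries that are not mounted yet
```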

step 3: set up service dependencies

here’s the crucial part. we need services to start in the right order: iSCSI first, then mounts, then containerd, then kubelet.

why this order matters:

- iSCSI services need to establish the network connection to your storage

- mount points need the iSCSI disks to be available

- containerd is the container runtime that manages all your containers and images. it needs its storage (/var/lib/containerd) to be mounted before it can start

- kubelet is the kubernetes node agent that talks to containerd to actually run pods. it depends on containerd being healthy and also needs its own storage (/var/lib/kubelet) for things like pod volumes and metadata

basically, kubelet is like the manager that tells containerd “hey, run this container,” and containerd is the worker that actually does it. but if containerd can’t access its storage, it can’t manage any containers, and then kubelet just sits there confused.

configure containerd to wait for everything it needs:

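sudo mkdir -p /etc/systemd/system/containerd.service.d
sudo nano /etc/systemd/system/containerd.service.d/override.conf

with this content:

[Unit]
# order after the iSCSI services (names vary by distro; After= on a unit that doesn't exist is harmless)
After=iscsi.service iscsid.service open-iscsi.service
# require, and order after, the mount units for these paths
RequiresMountsFor=/var/lib/containerd /var/lib/kubelet /var/log/pods

[Service]
# retry instead of staying failed if something is still slow at boot
Restart=on-failure
RestartSec=5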
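instead of nano, the same drop-in can be written non-interactively, which is handy once this goes into a script or playbook. a sketch; `write_containerd_dropin` is a hypothetical name, and its ROOT argument exists only so it can be tried against a temp directory. `sudo systemctl edit containerd.service` is another option that creates the override.conf drop-in for you.

```shell
# hypothetical helper: write_containerd_dropin ROOT
# writes the containerd override under ROOT ("/" on the real node).
write_containerd_dropin() {
  local dir="${1%/}/etc/systemd/system/containerd.service.d"
  mkdir -p "$dir"
  # quoted 'EOF' so nothing inside the heredoc gets expanded by the shell
  cat > "$dir/override.conf" <<'EOF'
[Unit]
After=iscsi.service iscsid.service open-iscsi.service
RequiresMountsFor=/var/lib/containerd /var/lib/kubelet /var/log/pods

[Service]
Restart=on-failure
RestartSec=5
EOF
}

# usage from a root shell, then make systemd re-read its unit files:
#   write_containerd_dropin /
#   systemctl daemon-reload
```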

configure kubelet to wait for iSCSI, its mounts, and containerd:

sudo mkdir -p /etc/systemd/system/kubelet.service.d
sudo nano /etc/systemd/system/kubelet.service.d/override.conf

[Unit]
After=iscsi.service iscsid.service open-iscsi.service containerd.service
RequiresMountsFor=/var/lib/kubelet /var/lib/containerd /var/log/pods

[Service]
Restart=on-failure
RestartSec=5
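before rebooting, you can confirm that systemd actually picked up the new dependencies. `systemctl show -p After` and `systemctl show -p RequiresMountsFor` each print one `Key=value value ...` line; the sketch below, with a hypothetical `unit_lists` helper, just checks that a given value is in that list. run `sudo systemctl daemon-reload` first, since systemd only re-reads unit files on reload or reboot.

```shell
# hypothetical helper: read one "Key=value value ..." line (as printed by
# `systemctl show -p Key unit`) on stdin and succeed if DEP is one of the values.
unit_lists() {
  local dep=$1 line=
  # tolerate a missing trailing newline
  IFS= read -r line || [ -n "$line" ] || return 1
  # pad with spaces so only whole space-separated values match
  case " ${line#*=} " in
    *" $dep "*) return 0 ;;
    *) return 1 ;;
  esac
}

# usage:
#   systemctl show -p After kubelet.service | unit_lists containerd.service && echo ok
#   systemctl show -p RequiresMountsFor kubelet.service | unit_lists /var/lib/kubelet && echo ok
```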

time to test with a reboot

sudo reboot

after rebooting, you should see the iSCSI connection established on your NAS, df -h should show all three disks properly mounted, and kubectl get nodes should report the node as Ready again.
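to check the connection from the node side as well, here's a minimal sketch. it assumes `iscsiadm -m session` prints lines in its usual `tcp: [sid] portal,tpgt target-iqn ...` form, so the target name is the fourth field; `has_session` is a hypothetical helper name.

```shell
# hypothetical helper: succeed if `iscsiadm -m session` output on stdin contains a
# session for the given target IQN (field 4 in lines like, e.g.,
# "tcp: [1] 192.168.1.10:3260,1 iqn.2000-01.com.synology:nas.Target-node1 (non-flash)").
has_session() {
  awk -v t="$1" '$4 == t { found = 1 } END { exit !found }'
}

# usage:
#   sudo iscsiadm -m session | has_session iqn.2000-01.com.synology:nas.Target-node1 && echo connected
```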

wrapping up

honestly, these steps should be automated. my current kubernetes setup is based on kubernetes-the-hard-way, and during that installation i wrote ansible playbooks to automate most of it. so i’m definitely going to add one more ansible playbook for these iSCSI steps too.

just in case you’re doing something similar, i’d actually recommend considering kubespray instead. it does pretty much the same thing: it automates kubernetes setup with ansible on bare metal. so if you’re just starting the journey of building your own kubernetes cluster on bare metal, definitely check out kubespray.

but of course, it all depends on what you’re trying to achieve. sometimes the hard way teaches you more! ☕